As 2023 unfolds, the generative AI space is buzzing with a vibrant medley of cultural moments, echoing throughout the music industry like an electrifying riff. Alt-pop cyborg Grimes, for instance, boldly invited fans to remix her voice, while an AI-fueled deepfake collaboration between Drake and The Weeknd emerged from the digital shadows. Opinions, like contrasting musical genres, are diverse. Some in my circle envision a dystopian future where musicians are out of work, although I contend that this dystopia has long been upon us. Stories such as these understandably fuel concerns that creativity's curtain call is imminent.

As a lifelong music enthusiast and a producer since 2013, blending music with cutting-edge technology has been my passion. When I first discovered Jukebox and MuseNet in 2020, the idea of generating music from trained data struck me like a thunderous crescendo. Holly Herndon's 2021 release of Holly+, her AI-trained digital twin, only amplified my awe. By the summer of 2022, as I tinkered with Stable Diffusion and Midjourney for image generation and IRCAM RAVE for sound, it dawned on me: these innovative tools hold the potential to enhance and amplify an artist's creative expression.

As an eternal optimist, I propose this thesis: Generative AI is revolutionizing the music industry by expanding creative possibilities, democratizing the art, and making advanced tools more accessible to musicians. Rather than predicting the future, I seek to explore the vast array of possibilities that lie on the horizon.

That said, I'm putting a pin in the challenging questions of copyright and intellectual property for now. These issues are crucial, undoubtedly, but they warrant separate, in-depth analysis that my peers, more versed in those areas, can tackle with confidence. For anyone keen on diving into those discussions, you can check out these resources here, here, and here.

New Sounds and Exploration of Latent Space

As an artist, I've always been drawn to discovering fresh sounds and rhythms. Throughout my creative journey, I've ventured into new territories, from drum loops inspired by 70s funk records to innovative sound techniques using the popular wavetable synth, Serum. These sounds don't necessarily have to be groundbreaking in a cultural sense; they just need to be novel to my ears.

I collect records for both personal enjoyment and artistic inspiration, often sampling them in my work. This process of sifting through old records for samples is known as "crate digging." In today's ever-evolving world, I believe we can also "crate dig" in the latent space (latent space in AI is like a blueprint of all possible combinations, from which new unique creations like images, words, or ideas can be generated). Here, I can generate intriguing sounds to curate and incorporate into my projects. Some are coherent, while others are delightfully bizarre. (edit: “crate digging in latent space” is an idea originally seeded by the artist patten)
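To make the latent space idea a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: `decode` is a toy stand-in for a trained model's decoder (it simply mixes a few sine tones), and `dig` and `blend` are hypothetical helper names, not part of any tool mentioned in this essay. The point is only the workflow: pick points in the space, decode them into sounds, and explore the territory between two finds.

```python
import math
import random

SAMPLE_RATE = 8000   # low rate keeps the toy example fast
NUM_SAMPLES = 2000   # a quarter of a second per generated sound
PARTIALS = [110.0, 220.0, 330.0, 440.0]  # fixed sine frequencies in Hz


def decode(latent):
    """Toy stand-in for a trained decoder: turn a latent vector into audio.

    Each latent coordinate sets the loudness of one sine partial.
    A real model would learn this mapping from data instead.
    """
    weights = [abs(w) for w in latent]
    audio = []
    for n in range(NUM_SAMPLES):
        t = n / SAMPLE_RATE
        audio.append(
            sum(w * math.sin(2 * math.pi * f * t) for w, f in zip(weights, PARTIALS))
        )
    peak = max(abs(x) for x in audio)
    # Normalize so the loudest sample sits at +/-1.0.
    return [x / peak for x in audio] if peak > 0 else audio


def dig(num_sounds, seed=0):
    """Sample random latent points and decode each into a candidate sound."""
    rng = random.Random(seed)
    latents = [[rng.gauss(0.0, 1.0) for _ in PARTIALS] for _ in range(num_sounds)]
    return latents, [decode(z) for z in latents]


def blend(z_a, z_b, steps=5):
    """Walk in a straight line between two latent points (steps must be >= 2)."""
    sounds = []
    for i in range(steps):
        a = i / (steps - 1)
        z = [(1 - a) * x + a * y for x, y in zip(z_a, z_b)]
        sounds.append(decode(z))
    return sounds


latents, sounds = dig(num_sounds=3)
morph = blend(latents[0], latents[1])
print(len(sounds), "candidate sounds,", len(morph), "in-between sounds")
```

In practice the curation step is the human part: you audition what comes out and keep only what resonates, much like flipping through a crate.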

Here’s a riff I’ve generated from Never Before Heard Sounds. This sounds like a starting point for a lofi beat.

To me, this feels deeply creative, like having a jamming partner endlessly supplying me with fresh inspiration. Creative technologists Holly Herndon and Mat Dryhurst have coined the term "Spawning" to describe this concept:

We propose a term for this process, Spawning, a 21st-century corollary to the 20th-century process of sampling. If sampling afforded artists the ability to manipulate the artwork of others to collage together something new, spawning affords artists the ability to create entirely new artworks in the style of other people from AI systems trained on their work or likeness.

Source: Infinite Images and the Latent Camera

UK-based artist patten explores this idea on his latest release, Mirage FM:

Like a crate digger in latent space, patten scoured hours of lo-fi recordings from these sessions for tiny sections that resonated, and used these as building blocks to assemble ‘Mirage FM’. With countless hours of chopping, sequencing, layering, EQ, repitching, and fx, it started to take shape.

Source: Mirage FM Bandcamp

Amid all this, it's crucial to remember that the role of the artist is not diminished but rather redefined. In this new paradigm, artists using these systems still rely on their creative direction, intuition, and input. Just as a skilled DJ might select the perfect record for the moment, artists choosing from the sea of possibilities offered by AI must select the generated audio that resonates with their creative vision and connects with a wider human audience. AI, in this regard, is the new instrument—an instrument that offers limitless possibilities, but which still requires a director’s touch to unlock its full potential.

And just like traditional instruments, this new tool also has its unique tone and character.

AI models possess a distinct sonic personality. Much like the warm analog feel of vinyl and tape, which has evolved into its own aesthetic, music-focused neural networks exude a specific sound: scratchy, occasionally incoherent, and undeniably peculiar. This opens doors for music producers seeking unique elements for their productions. The extreme AI music ensemble Dadabots elaborates on this idea:

While we set out to achieve a realistic recreation of the original data, we were delighted by the aesthetic merit of its imperfections. Solo vocalists become a lush choir of ghostly voices, rock bands become crunchy cubist-jazz, and cross-breeds of multiple recordings become a surrealist chimera of sound. Pioneering artists can exploit this, just as they exploit vintage sound production (tube warmth, tape-hiss, vinyl distortion, etc).

Source: Generating Black Metal and Math Rock: Beyond Bach, Beethoven, and Beatles (2017)

Here is CJ Carr from Dadabots expounding on new sound design possibilities using neural networks, from CONVO, Ep 2 “Music Creation in the Age of AI.”

And here’s virtuoso bass producer Mr. Bill on using generative AI to create really interesting samples.

This view is also shared by AI Audio Researcher, Christian Steinmetz. Here’s Steinmetz’s quote from this must-read article, “AI and Music Making Pt. 1”:

“the growth of a breed of programmer/musician/audio engineer, people that are both into the tech and the music side.” These people will either find creative ways to “break” the AI models available, or “build their own new models to get some sort of new sound specifically for their music practice.” He sees this as the latest iteration of a longstanding relationship between artists and their tools. “Whenever a [new] synthesizer is on the scene, there's always some musicians coming up with ideas to tinker with it and make it their own.”

Source: AI and Music Making Pt. 1

So, as we embrace the partnership of AI, we do so knowing our roles as artists are not displaced, but evolved. Our fingers on the pulse of this technology, we’re no longer just musicians, but explorers of the latent space, crate diggers of a new era. AI, with its peculiar yet captivating tone, stands as a fresh landscape for our creative minds to traverse and unearth unheard sonic treasures. Yet, the excitement doesn’t end here. The dawn of a new question presents itself—what if we could turn these technologies inward, creating an echo chamber that bounces off our existing creations to give rise to something entirely unexpected?

Seeding Inspiration

Have you ever been curious about training an AI model on your work to spin out new versions, as if seeing yourself through a funhouse mirror? I certainly have, and I can assure you, it's not only possible but also quite fascinating.

Last year, I discovered a Colab Notebook that enabled me to play with OpenAI's Jukebox. My mission was simple: use a track I'd previously released as a seed and let the AI model dance around it, generating distinct variations.

Here’s a song I released in 2013, as the basis of this experiment:

And here are the alternate versions generated by the model. The model starts to kick in around the 0:20 mark:

The results were nothing short of intriguing. The AI, almost like a musical confidante, suggested melodic paths I hadn't trodden before, triggering a spark of creative surprise. The textures and effects it created, with standout sound design, were pleasing to my ears, echoing the nostalgia of old tapes or vinyl records.

This short stint with the AI model was akin to a musical jam session: a harmonious exchange between my earlier work and the model's extensions. As I reflect on this, I'm convinced that as this technology matures, it could become a powerful idea generator, a creative ally to rescue us from those moments of artistic blockade. Yet, the magic of AI doesn't stop at creation. It can be an accomplice in learning and exploration, too.

A Study Companion

Indeed, a dance with AI, especially with Large Language Models (LLMs), can be an enlightening tango, a powerful duet that illuminates your path through music theory and history. As the melodies evolve, they impart a richer understanding of why certain styles resonate in unique ways, like a musical time travel.

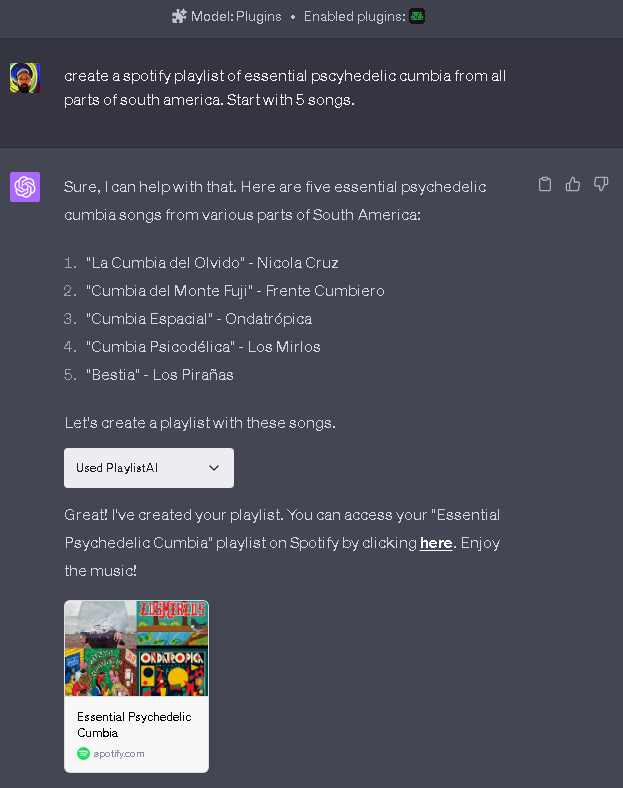

With the recent integration of plugin capability in ChatGPT, my conversations have turned into immersive journeys of discovery. One plugin that stands out is PlaylistAI, an AI DJ, if you will. Typically, I'd nudge it to craft a playlist mirroring the style of an artist I admire. It's like a treasure hunt, but instead of a map, you're handed a playlist filled with gems that echo your favorite artist.

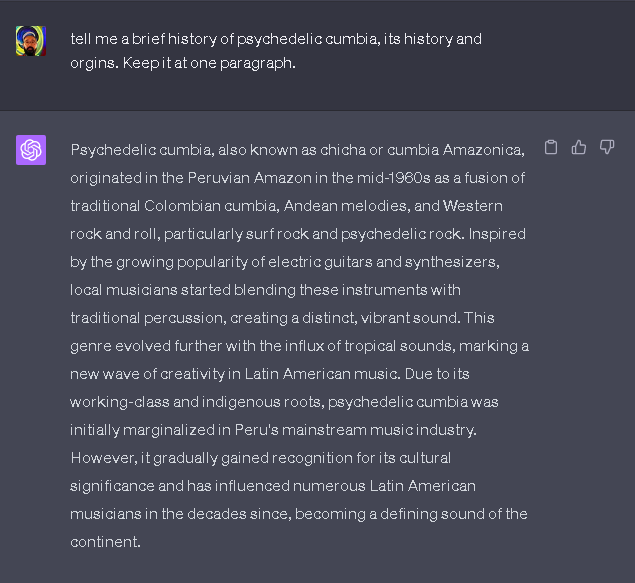

Imagine this: I'm currently mesmerized by the intoxicating rhythm of psychedelic cumbia. With the curiosity of an adventurer, I asked ChatGPT to share the history of this fascinating genre:

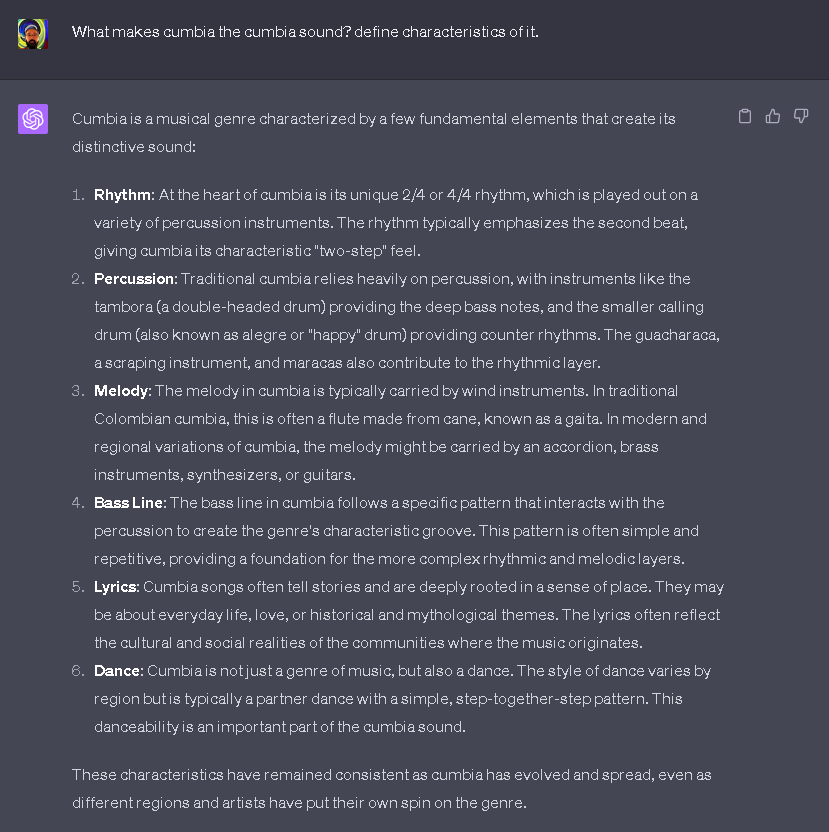

Then, like asking a knowledgeable guide, I requested examples of emblematic pieces:

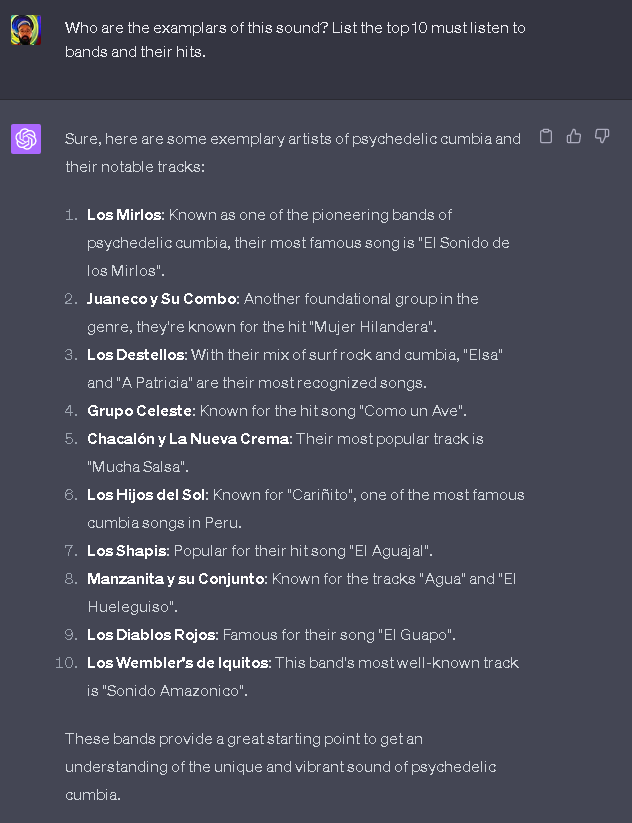

With the magic of the plugin, I asked it to curate a playlist that encapsulates the spirit of psychedelic cumbia:

This was my ticket to tumble further down the psychedelic cumbia rabbit hole. (Here’s the generated playlist if you’re curious.)

Indeed, this powerful AI tool is like a compass for musicians, guiding us to dive into uncharted musical territories beyond our comfort zones. It's a time machine offering us a richer understanding of the musical tapestry that has shaped us. This journey, while being self-directed, opens doors to vibrant new worlds of sound, encapsulating the essence of music that came before us.

And it's not just historical exploration and music theory where LLMs shine. In the realm of creation and collaboration, they can serve as invaluable partners.

Producer Aries paints this picture in a video, injecting humor into his creation of a song and a genre he cheekily dubs "Aqua-Funk." From the conception of the song idea to choosing the right sounds and crafting lyrics, Aries' accomplice is ChatGPT, a partner in his creative process.

The whole endeavor is a hoot, and while the output may not be chart-topping, it sheds light on the promising potential of ChatGPT. With well-tailored prompts, this AI model can transform into a trove of fresh song ideas, a playground for the imaginative musician.

However, the capabilities of AI extend beyond the auditory realm, branching out into the world of visuals. A seamless integration of sound and sight enhances a musical project, and AI offers a helping hand in this space as well.

Dreaming Up Images and Videos

Imagine being a musician, a weaver of sound, who doesn't quite speak the language of visuals. Enter tools like Midjourney and Stable Diffusion (both are text-to-image diffusion models), offering a helping hand to generate visual assets for your music releases.

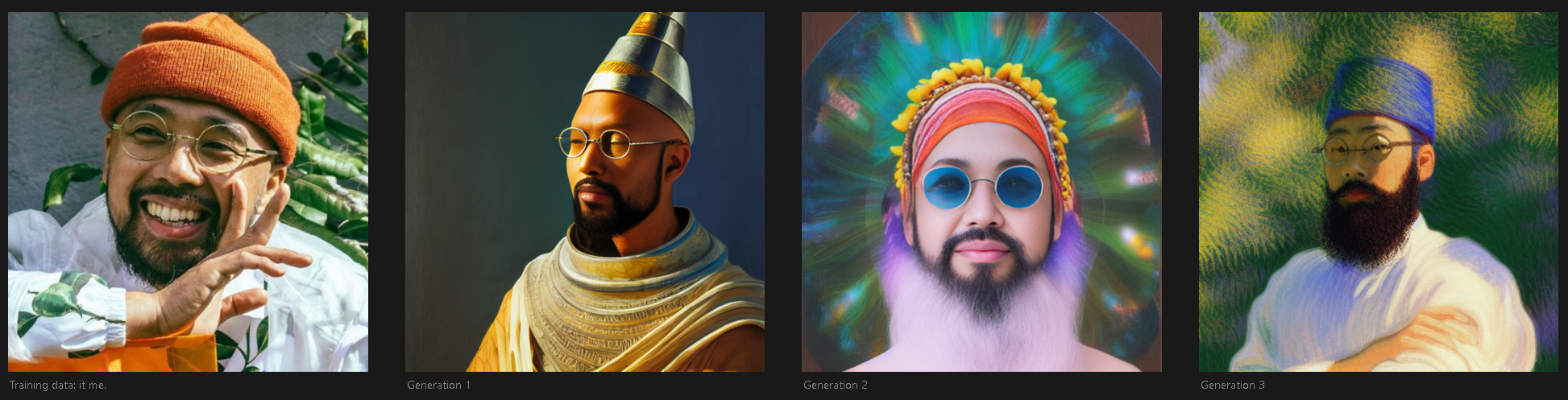

Stable Diffusion, my personal favorite, serves as a digital artist, sketching images or even crafting the very likeness of an artist. It's as if the machine itself is thumbing through an album of your press photos and recreating your image. Here are some digital clones it birthed from my own photographs.

The visuals born from this process become the seeds of an animated video, sprouting within Stable Diffusion using a plugin called Deforum. And the final result? Here's a glimpse:

Midjourney, on the other hand, conjures up images that are neat and harmonious. Yet, it's capable of spilling into abstract territory, creating visuals that might seem chaotic but are nonetheless captivating. Just take a look at these pieces curated by the art collective Felt Zine:

And here are a few that I generated:

These visual tools serve as an artistic catalyst, empowering artists to readily experiment with diverse styles and aesthetics. When guided by the right prompts, the output doesn't merely reflect your identity; it vibrates in harmony with your music, echoing your unique artistic vision.

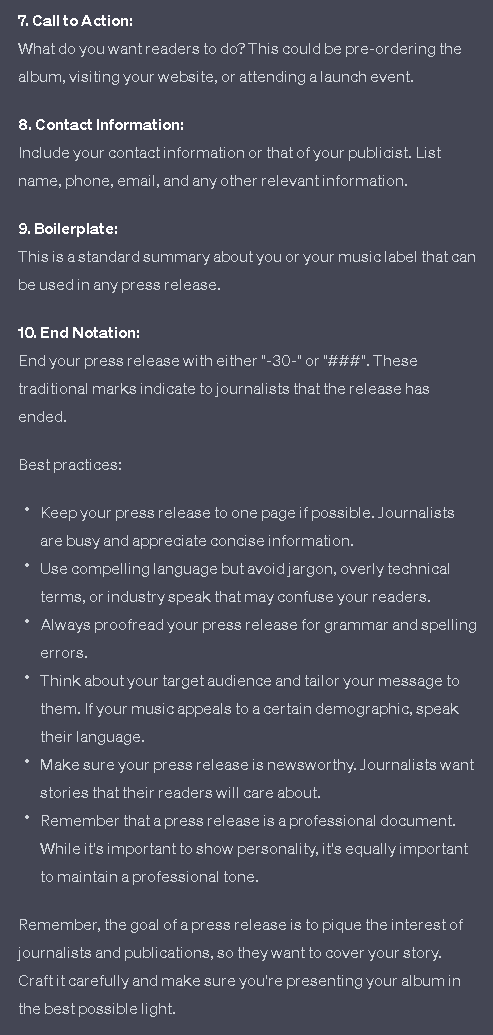

And this imprint extends beyond the realm of visuals, into another aspect of an artist's identity: writing. Words often serve as an introduction to an artist's work, shaping the audience's perception. With AI, the daunting task of crafting artist bios and press releases becomes more manageable.

A Writing Partner

Ever grappled with the task of crafting artist bios or press releases? If you’re anything like me, it's a daunting process. Not that I lack pride in my work, far from it. It’s just that the act of talking about myself and my art feels awkward. I'd rather let the work do the talking. But let's face it, bios and press releases are crucial. They provide context, serve as a guide, and shed light on the artist and their creations.

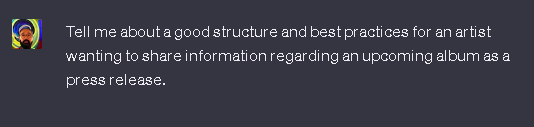

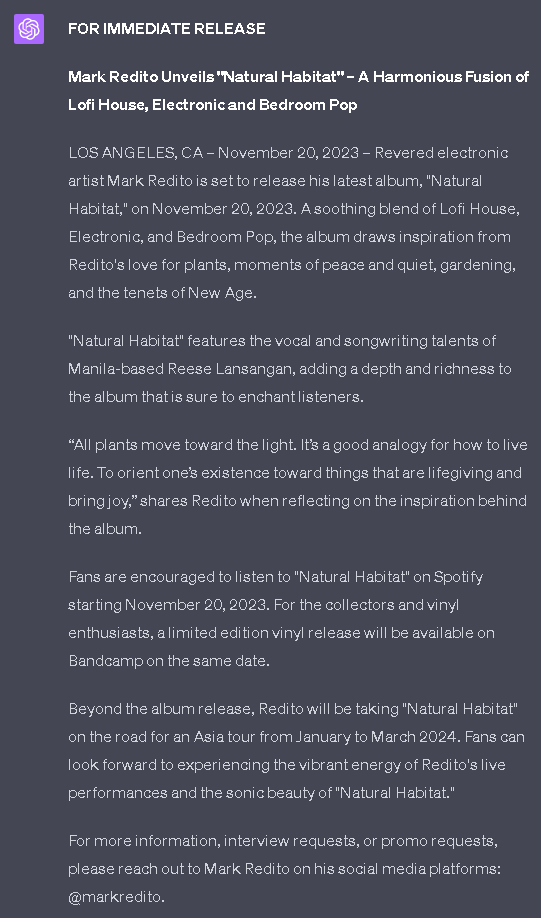

Coming once again to our rescue is ChatGPT, serving as a reliable ally for these often dreaded tasks. I want to walk you through how I use the model to draft a press release for an album I released years ago. Think of it as a time machine, with the album as our destination.

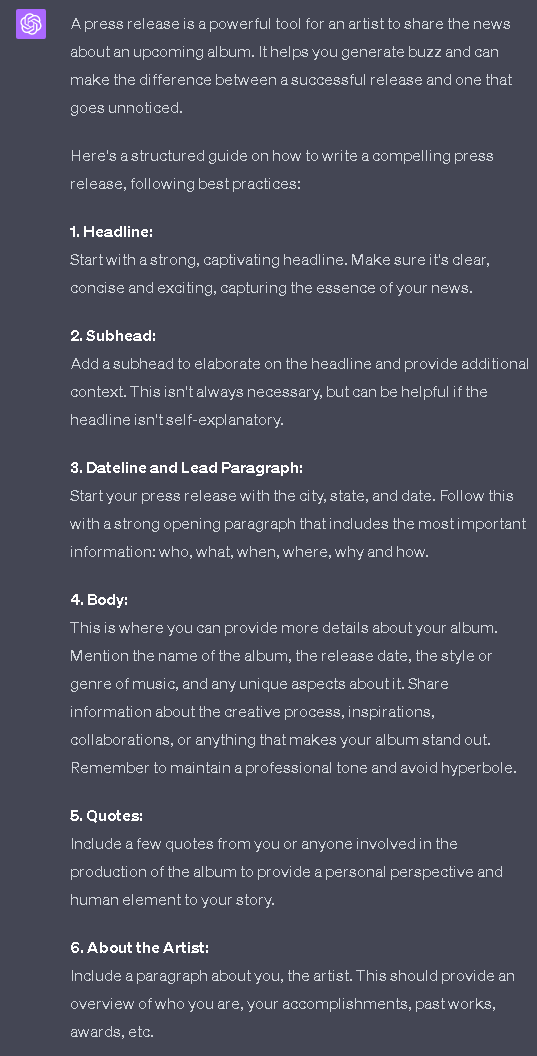

First off, I asked ChatGPT about the anatomy of a press release, those vital components that make a press release tick. This gives the model the context and framework it needs, while also enlightening us users about the essential structure of a press release.

Next, I sought ChatGPT's help to pen a press release for my album. But before it could start, I wanted it to grill me with questions, to arm itself with enough context and information.

The result? A decent press release. Granted, my writer buddies may beg to differ, but I believe with careful prompting and a bit more context, it could be even better. And for an artist like me who would rather invest time in creating than writing about creations, this press release was good to go.

Democratization of Music Creation

Let’s go back to the early days of my musical voyage. It was a world laid open by the welcoming arms of Audacity, a free and open-source software, my maiden Digital Audio Workstation (DAW). It illuminated the intricate dance of recording and arrangement, paving the way to fluency.

Then, the baton was passed to an early version of FL Studio, or as we knew it then, Fruity Loops. More than just a DAW, it was a wonderland teeming with software instruments—drum machines, synths, samplers, and more. Free to explore and experiment, I was unfettered by budget constraints. This low-cost wonder forged a community where fellow users were able to learn and grow from each other.

Now, let's shift the lens to Generative AI, another threshold moment in the evolution of musical exploration and expression. Easy to use, low-cost, and staggeringly powerful, it opens the gates to artists, both green and seasoned, sparking creativity in unimaginable ways. As with my earlier journey, it's democratizing the artistic landscape by lowering the barriers to entry.

I am an advocate for the democratization of art. It is a catalyst for a vibrant tapestry of artists and makers, stirring their vision and concepts. This creative cauldron has proven to be a driving force for progress in technology, culture, and society. Why not stoke these flames for the benefit of future generations?

The democratization of music tools has undoubtedly brewed a potent potion of mediocrity. However, music is a dance to the rhythm of subjectivity. Listeners are the jurors, imparting meaning and value to the tunes that resonate with their hearts.

I don’t view Generative AI as my replacement or that of my peers—at least not in the foreseeable future. It’s my accomplice, augmenting my expressions, a companion nudging me to stretch my creative boundaries.

As we contemplate the potential of AI, the thoughts of experts in the field add depth to our understanding. Once again here’s AI Music Researcher Christian Steinmetz’s interesting take:

“In mixing or audio engineering, it's a similar thing. If you're doing a job that could theoretically be automated - meaning that no one cares about the specifics of the artistic outputs, we just need it to fit some mold - then that job is probably going to be automated eventually.” But when a creative vision is being realized, the technology won’t be able to replace the decision-maker. Artists will use “the AI as a tool, but they're still sitting in the pilot's seat. They might let the tool make some decisions, but at the end of the day, they're the executive decision-maker.”

Source: AI and Music Making Pt. 1

In the spotlight of this AI stage, the artist's intent takes center stage. What's your vision? What's your plan? Amidst the vast expanse of possibilities, where do you set your compass?

Roadblocks and Revelations: AI Limitations and Challenges

AI models may be well-versed in the accumulation of human knowledge, but they are not omniscient. While their predictive abilities might suggest otherwise, they are not mind readers. Bound by their programmed constraints, they lack the depth and diversity of information and experiences that we, as humans, possess.

Effective communication with these models is crucial. It must be grounded in clear instructions and an understanding of specific keywords, logic, and structure. For example, when you use Midjourney, knowledge of various art movements, artistic styles, medium formats, lighting configurations, and rendering styles can greatly assist you in actualizing your vision. However, despite the accuracy of the generated image, you may find some discrepancies, like improperly rendered hands, a common indicator of AI generation. Here's an enlightening video that illuminates why this occurs. To bypass these model idiosyncrasies, I dove headfirst into understanding how image data is labeled and familiarizing myself with diverse art movements, styles, and terminologies.
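One way I think about structuring an image prompt is as a handful of named ingredients joined together. The sketch below is only that idea in Python: `build_prompt` and its field names are hypothetical, the example values are made up for illustration, and nothing here calls Midjourney or uses any of its actual parameters. It just assembles a text string you could paste into a text-to-image tool.

```python
def build_prompt(subject, movement=None, medium=None, lighting=None, rendering=None):
    """Join whichever descriptive parts are provided into one comma-separated prompt.

    The field names are illustrative assumptions, loosely following the kinds
    of vocabulary mentioned above (art movement, medium, lighting, rendering).
    """
    parts = [subject, movement, medium, lighting, rendering]
    # Skip anything left as None or blank so the prompt stays clean.
    return ", ".join(p.strip() for p in parts if p and p.strip())


prompt = build_prompt(
    subject="portrait of a DJ behind turntables",
    movement="Afrofuturism",
    medium="35mm film photograph",
    lighting="neon rim lighting",
)
print(prompt)
# portrait of a DJ behind turntables, Afrofuturism, 35mm film photograph, neon rim lighting
```

Keeping the ingredients separate makes it easy to swap one at a time, say the lighting or the medium, and compare how the output shifts.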

Language models such as ChatGPT essentially function as a sophisticated version of auto-complete, predicting the next word based on the context that precedes it. Despite their impressive capabilities, these models have a propensity to "hallucinate," producing illusory or incorrect information. Careful prompting and fact-checking plugins can serve as safeguards against this.
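To illustrate the auto-complete idea at its very simplest, here is a toy next-word predictor in Python. This is a deliberately tiny sketch and an assumption on my part, not how ChatGPT is built: real language models use neural networks trained on vast amounts of text, whereas this one just counts which word follows which in a single made-up sentence. The function names `train_bigrams` and `autocomplete` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def autocomplete(follows, start, length=5):
    """Repeatedly append the most frequent next word seen in training."""
    out = [start]
    for _ in range(length):
        options = follows.get(out[-1])
        if not options:
            break  # the model has never seen anything follow this word
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


# Made-up training text, purely for illustration.
corpus = "the beat drops and the beat drops again and the crowd moves"
model = train_bigrams(corpus)
print(autocomplete(model, "the", length=2))
# the beat drops
```

Notice that the predictor only knows what tends to come next, not whether the result is true. That is a small-scale hint at why fluent output can still be wrong.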

II. Expanding creative possibilities

A. New sounds and exploration of latent space

1. Comparison to crate digging and sampling (need more context)

2. Innovations in sound design with generative AI (source: https://arxiv.org/abs/2007.04868)

B. Approximating sounds in a musician's head

1. A new sound design tool that captures the artist's imagination (source: https://www.nature.com/articles/s41598-019-49501-0)

C. Customizable generative models

1. Training models on personal work for unique variations (source: https://www.vice.com/en/article/qkq33g/this-ai-trained-on-19-million-piano-songs-will-make-you-cry)

D. Generative AI as a study partner

1. Influence of listening to new and old music

2. Culture study: understanding and contextualizing musical influences (source: https://ieeexplore.ieee.org/abstract/document/8466616)

While researching this essay, I sought the assistance of ChatGPT to source valuable references and citations. Above is an instance of its generated output, which, unfortunately, was riddled with broken or inaccurate links. However, the landscape has since shifted with the introduction of recently unveiled plugins and the browsing feature. Other chatbot alternatives like Anthropic’s Claude 2 feature a more recent training data cutoff as well. These upgrades promise to deliver more accurate and timely information, honing the tool's efficacy.

Generative Music AI grapples with its own set of challenges. User interfaces can be less than stellar or intuitive, especially when dealing with beta or open-source software. Understanding how to use notebooks like Colab or Jupyter can go a long way. Even as developers attempt to simplify interfaces, some familiarity with Python code is still expected.

Models such as Google's MusicLM and Riffusion invite creative exploration. They generate coherent musical samples, but crafting complete songs is beyond their reach. These models can ignite inspiration but lack the flexibility necessary for further song development.

As with any innovative software, a host of limitations and challenges are par for the course. Yet, in the face of these obstacles, progress marches on. The ceaseless work of many organizations and individuals is propelling these AI models and tools towards more advanced, user-friendly versions.

Conclusion

We stand on the precipice of a new era, one that mirrors the pivotal moment when musicians first toyed with electronic instruments like synthesizers and drum machines back in the 70s and 80s. There was resistance, a chorus of critics deeming it 'fake music,' void of emotion. Fast-forward to today, and we find that an immense proportion of the music we consume is touched by computers.

Generative AI, as I see it, plays a similar tune. It broadens our artistic expressions, urging us to ascend to higher levels of abstract thought and ideation. Computer scientist Stephen Wolfram struck a chord during an episode of the Lex Fridman Podcast that resonates deeply with this sentiment:

"If you don't need to know the mechanics of how to tell a computer in detail… 'make this loop… set this variable… set up this array'… if you don't have to learn the 'under the hood' things, what do you have to learn? I think the answer is you need to have an idea where you want to drive the car."

Source: Lex Fridman Podcast, EP376 featuring Stephen Wolfram

When you're unshackled from the complexities of the engine, focusing instead on where you want to steer, it paves the way for a myriad of unexplored possibilities and novel artistic expressions.

I confess, my journey into this world began with skepticism and caution back in 2020. But as I started envisioning these models as extensions of my own creativity, a wave of excitement washed over me. I discovered new avenues to extend my skills, to spontaneously generate ideas and starting points. It was as if a key had unlocked a door in my mind, revealing untapped opportunities for novel creations.

This transformation in perception echoes the sentiments of Mat Dryhurst and Holly Herndon of Spawning, who eloquently state:

It is true that some aspects of rote musical production are likely to be displaced by tools that might make light work of those tasks, but that just shifts the baseline for what we consider art to be. Generally speaking artists we cherish are those that deviate from the baseline for one reason or another, and there will be great artists in the AI era just as there have been great artists in any era.

Source: AI and Music Making Pt. 1

Pondering upon this, what fresh artistic expressions will sprout from the fertile matrix of these AI models? How will they manifest, echo, and touch our consciousness? What metamorphosis awaits the artist in the cradle of AI? As I stand on the brink of this exciting expedition, I feel a flutter of anticipation for the untrodden paths that lie before us. Thus, we embark on a voyage into the boundless sea of possibilities, our unvoiced questions yearning to unfurl to the rhythm of Generative AI. We stand on the precipice of a new dawn in creativity, ready to plunge into the depths of uncharted artistry. 🌀

Special thanks to:

Nicole D’Avis, Bas Grasmayer, Yung Spielberg, CJ Carr (Dadabots) and Shamanic for the input and feedback.

Resources

Navigating the terrain of AI can feel like solving a complex jigsaw puzzle. There might be a bit of head-scratching and a few trial-and-error moments as you piece together the workings. But if these technologies captivate you, learning how the pieces fit can pave a way for novel creations.

Consider this your starter kit. Here are some pieces that have helped me complete my AI jigsaw:

Music

The link above is a members-only database of generative AI applications, from Water and Music.